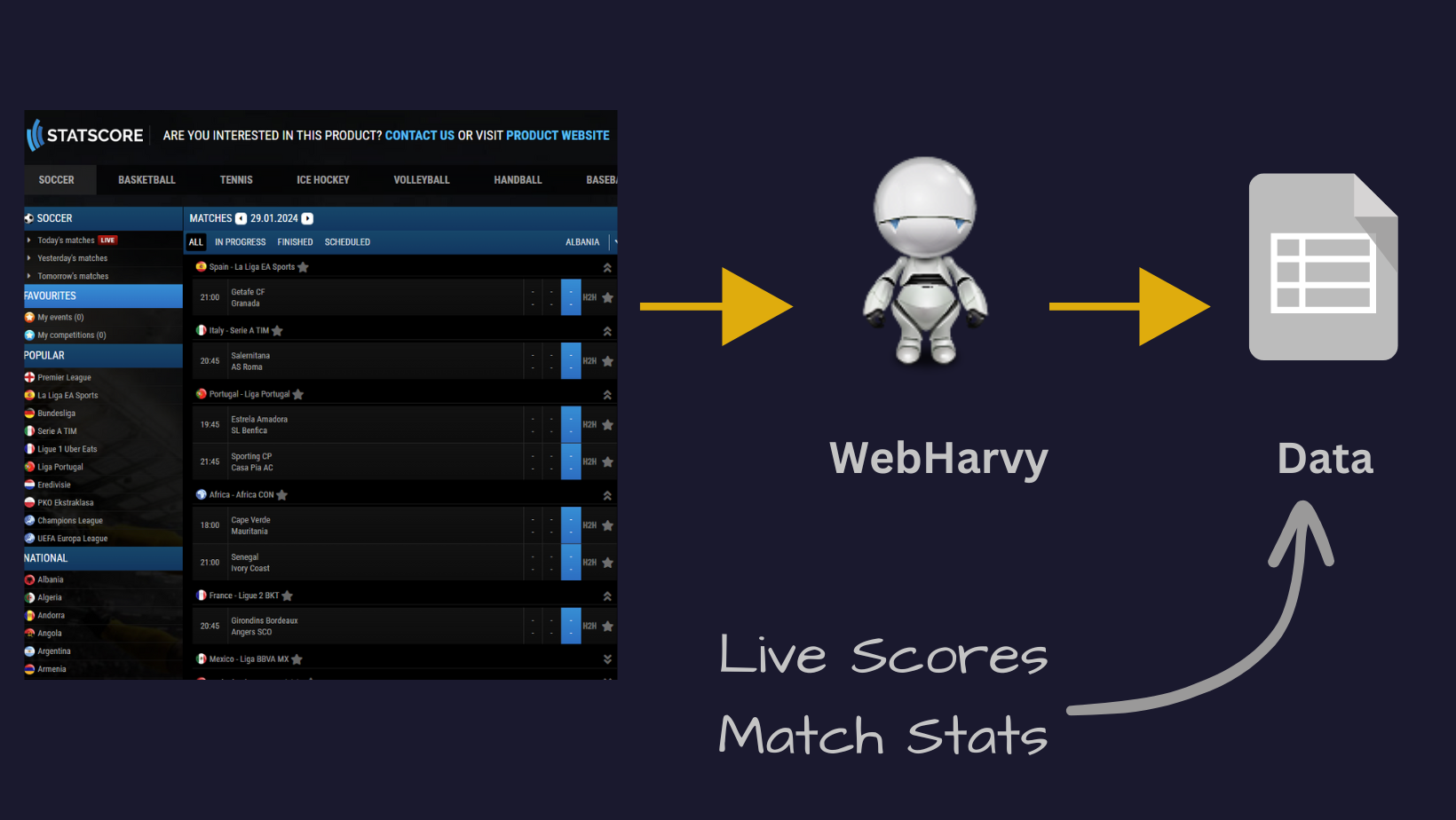

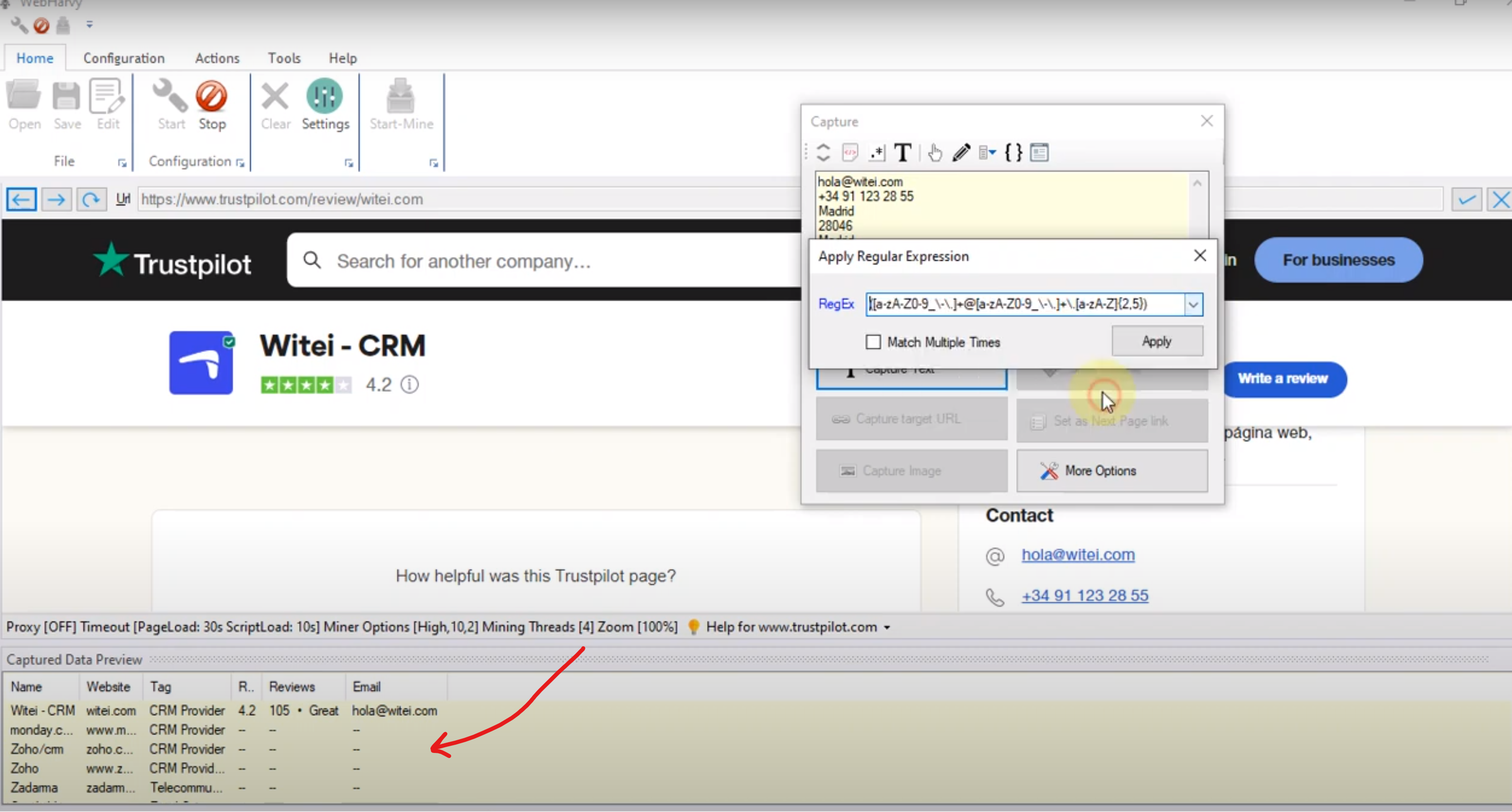

WebHarvy 7.3 – Keywords via Input-Text, Miner Options saved in Configuration etc.

The following are the main changes in Version 7.3 of WebHarvy.

Support for adding keywords via the ‘Input-Text’ option

On some websites, the search functionality is implemented so that the keyword the user enters does not appear in the URL or in the POST data of the search results page. In these cases, if you …